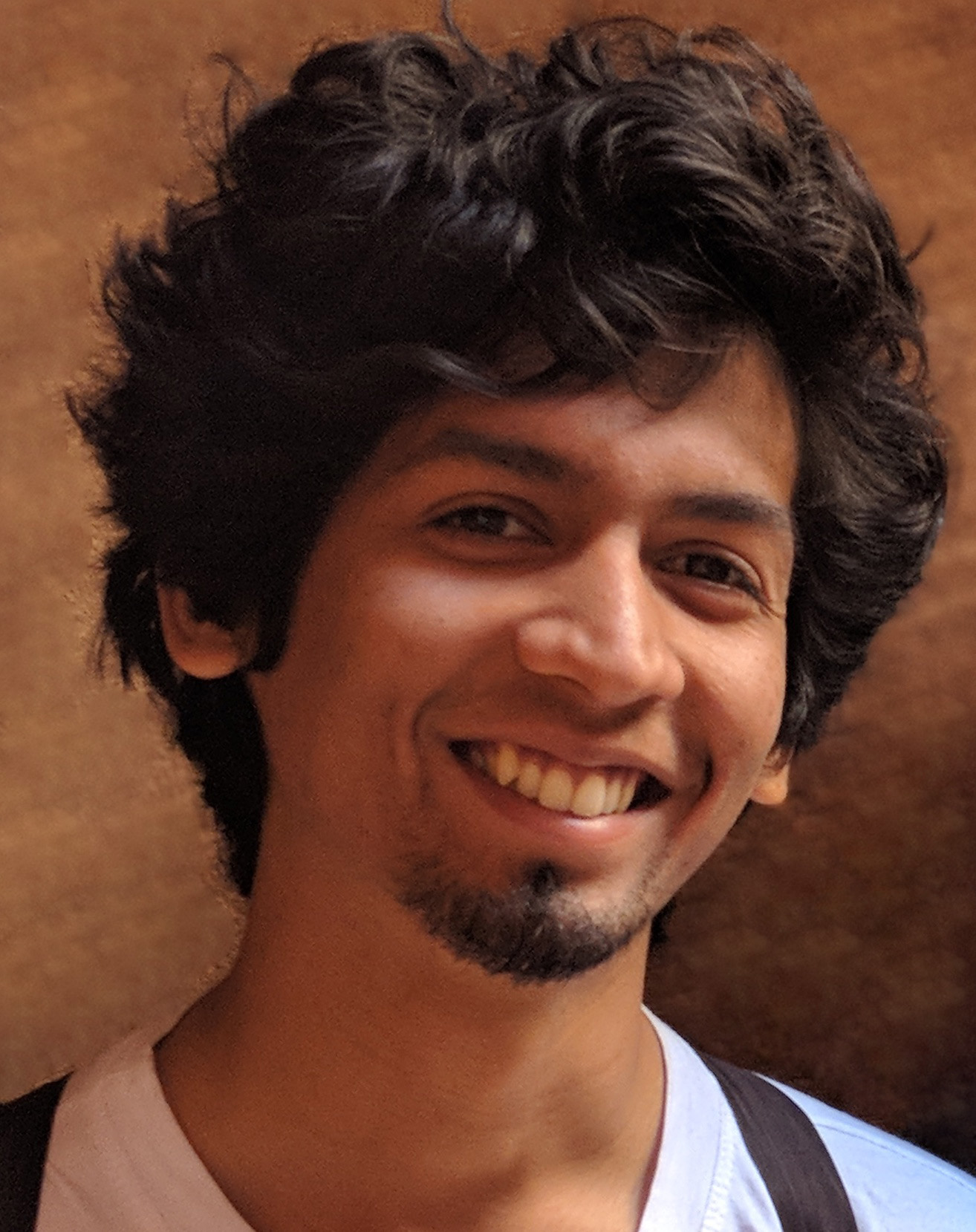

👋 Hi, I’m Akash. I work on meeting transcription as a Sr. Applied Scientist at Microsoft. I’m on an R&D team that ships state-of-the-art speech recognition models to Azure APIs and powers Microsoft products like the Copilot-assisted recap of meetings in Teams. In this role, I need to stay on top of fast-moving research, exercise judgement in connecting user experience with technology readiness, and keep experimenting until we can ship high-quality models that improve the user experience.

🐥🗣️ I recently open-sourced tinydiarize: an extension of OpenAI’s Whisper ASR model for speaker diarization that runs on MacBooks/iPhones via an integration in whisper.cpp.

🐋 At the 2020 Microsoft global hackathon, I also co-founded OrcaHello, an open-source AI system that monitors and issues 24x7 expert-validated alerts for SRKW orcas near 3 hydrophone locations in the Pacific Northwest. You can listen to last month’s detections here!

Broadly speaking, I have experience designing, debugging, and deploying various audio-based deep learning systems. I like wearing multiple hats and have most enjoyed contributing to both the design and engineering of products that make magic. You can find more details on my CV or find me on Twitter/X/Elon-app.

If you’re curious, carry on to read a brief ramble about my unconventional career arc thus far :)

-

[2018- ] 🤖 Started my tech career at Microsoft. As someone with a non-traditional background, I’ve gotten to pick up industry-scale “software and servers” skills, working with large codebases, distributed training, and data pipelines handling hundreds of terabytes. I’ve gotten to collaborate with expert PhDs in speech processing while also contributing to the evolution of the entire speech stack to one that’s more deep-learning-native. Co-curricularly, I made my first major OSS contribution thanks to OpenAI’s Whisper ASR model and also co-founded an award-winning hackathon project that leverages AI to alert for orcas in the Pacific Northwest.

-

[2016-2018] 🤓 At Stanford's MS&E program, I initially aimed to become a PM or data scientist, but was soon drawn to understanding and building technology more deeply. I balanced marketing, strategy & design classes with self-assembled technical electives. I delved into math (deep learning, digital signal processing) and code (databases, implementing a heap allocator in C), and built a neural text-to-speech system that was appreciated by Prof. Richard Socher. They were two intense years, but they gave me confidence in my ability to learn and bridge different worlds. I look forward to the dots connecting in the future :)

-

[2015-2016] 🤘 Took a gap year before Stanford and entered the code world through stints at startups in Bangalore. As a data scientist at Ather Energy, I contributed to an early roadmap for vehicle intelligence features on its pioneering EV. I dipped my toes into the dark arts of deep learning, self-studying the classic CS231n at my roommate’s AI-for-radiology startup. I met some amazing people, got to witness the magic of crafting great, deep tech products in India, and have been inspired ever since.

-

[2011-2015] 🧪 In a previous life as a Chemical Engineer at IIT Madras, I’ve been at a hydrofracking site with 5000-horsepower pumps and tinkered with chemicals at a tobacco lab for nicotine extraction. My minor in control systems (linear algebra, stats, signal processing) gave me a foundation for machine learning math. In hindsight, my enjoyment of working on things I can touch and feel likely drew me to deep learning, and I’m glad that with AI, computing is headed in that direction too!